In the rapidly evolving field of data science, the tools a data scientist uses can make their work more productive and of higher quality. Data science is the process of drawing useful insights from structured and unstructured data using a mix of programming, machine learning, and statistics. Because the field spans so many kinds of data and methods, data scientists rely on a range of tools rather than a single one.

These tools help data scientists not only model and visualize data but also collect and clean it. This blog covers the top ten tools that every data scientist should know and that can support career success, explaining why each one matters and how it is used in practice.

1. Python

Python has earned a special status as the language of data science thanks to its accessibility, adaptability, and rich library ecosystem. Libraries such as NumPy for numerical computation, pandas for data manipulation, and scikit-learn for machine learning make it a foundational tool for the field. Its simple syntax has made it popular with beginners and experienced data scientists alike.

Best Use Case:

Python supports every stage of a data science project, from preparing and exploring data to building machine learning models. It scales from simple calculations to very complex deep learning tasks.

Key Features:

● NumPy: Provides fast, large multi-dimensional arrays and matrices, along with mathematical functions that operate on them.

● Pandas: Offers DataFrames for loading, cleaning, analyzing, and manipulating tabular data.

● Matplotlib/Seaborn: Produce charts and plots that present data effectively.

● Scikit-learn: Provides a consistent interface for building, training, and evaluating machine learning models.

● TensorFlow/Keras: Widely used frameworks for deep learning and building neural networks.
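To make the libraries above more concrete, here is a minimal sketch of a typical workflow: NumPy creates the arrays, pandas holds and summarizes the data, and scikit-learn fits a linear regression. The advertising-spend numbers are synthetic and made up purely for illustration, and the example assumes NumPy, pandas, and scikit-learn are installed.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# Synthetic, made-up data for illustration: sales roughly follow 3 * spend + 5
rng = np.random.default_rng(seed=0)
spend = np.arange(1.0, 11.0)  # NumPy: ten evenly spaced values, 1.0 to 10.0
sales = 3.0 * spend + 5.0 + rng.normal(0.0, 0.5, size=spend.size)

# pandas: store the data in a DataFrame and summarize it
df = pd.DataFrame({"spend": spend, "sales": sales})
print(df.describe().loc[["mean", "std"]])

# scikit-learn: fit a linear regression of sales on spend
model = LinearRegression()
model.fit(df[["spend"]], df["sales"])
print(f"slope ~ {model.coef_[0]:.2f}, intercept ~ {model.intercept_:.2f}")

# Predict sales for a new spend value (the column name matches the training data)
new_spend = pd.DataFrame({"spend": [12.0]})
print(model.predict(new_spend))
```

The fitted slope and intercept should land close to 3 and 5, the values used to generate the data; with real data you would replace the synthetic arrays with, for example, a DataFrame loaded by `pd.read_csv`.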

2. R

R is a programming language and software environment for statistical computing and graphics. It is a preferred choice in academic and research settings because it supports a wide range of statistical analyses and produces high-quality visualizations. Data scientists with an exploratory mindset in fields such as healthcare, the social sciences, and economics benefit from expertise in R, thanks to ready-to-use packages such as ggplot2, dplyr, and caret.

Best Use Case:

R is especially well suited to statistical analysis, hypothesis testing, and interactive data visualization. Researchers use it widely for in-depth statistical investigation and modeling.

Key Features:

● ggplot2: Builds layered, publication-quality data visualizations.

● dplyr: Offers a concise, readable set of verbs (such as filter, select, mutate, and summarise) that make data manipulation easy.

● caret: Provides a unified set of functions for training, tuning, and comparing models to find the best-performing one.

● Shiny: Lets you build interactive web applications that respond to user input directly from R.

3. Microsoft Excel

Microsoft Excel's time-saving features help people across an organization work more productively. It makes it easy to view and analyze large tables of information, and built-in features such as pivot tables, data filtering, and complex formulas make it an essential tool for data preparation and reporting.

Best Use Case:

Excel is an easy, efficient way to inspect samples of data, analyze them, and create reports. Business analysts often use it to produce analytical reports and data visualizations that support executives' decision-making. It is especially valuable in organizations that lack a common data source, because spreadsheets can be shared and analyzed by many users.

Key Features:

● Pivot tables: Summarize large datasets by grouping and aggregating rows.

● VLOOKUP and INDEX-MATCH: Search for a key, such as a user ID, a date, or a location, and return a related value from the same row.

● Power Query: Reshapes and cleans data.

● Graphs and charts: Creates simple visuals such as diagrams, charts, and bar graphs.
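To show what the lookup and pivot features compute, here is a small Python sketch of their logic. It is an illustration, not how Excel implements them: the hypothetical `vlookup` mimics an exact-match `=VLOOKUP(key, range, col, FALSE)`, `index_match` mimics `=INDEX(return_range, MATCH(key, lookup_range, 0))`, and `pivot_sum` mimics a pivot table that sums one field per group. The function names and the sample user table are made up for this example.

```python
from collections import defaultdict

# Hypothetical sample table: each row is (user_id, name, city, spend)
users = [
    ("U001", "Asha", "Pune", 120.0),
    ("U002", "Ben", "Leeds", 45.5),
    ("U003", "Chen", "Pune", 300.0),
]

def vlookup(key, table, col_index):
    """Exact-match VLOOKUP: search the FIRST column, return column col_index (1-based, as in Excel)."""
    for row in table:
        if row[0] == key:
            return row[col_index - 1]
    raise KeyError(f"{key!r} not found")  # Excel would show #N/A here

def index_match(key, lookup_col, return_col):
    """INDEX-MATCH: find key's position in one column, return the value at that position in another.
    Unlike VLOOKUP, the lookup column does not have to be the leftmost column."""
    position = lookup_col.index(key)  # like MATCH(key, lookup_col, 0); raises ValueError if missing
    return return_col[position]       # like INDEX(return_col, position)

def pivot_sum(table, group_col, value_col):
    """Pivot-table style summary: sum value_col for each distinct group_col (0-based indices)."""
    totals = defaultdict(float)
    for row in table:
        totals[row[group_col]] += row[value_col]
    return dict(totals)

names = [row[1] for row in users]
ids = [row[0] for row in users]

print(vlookup("U002", users, 3))        # Leeds
print(index_match("Chen", names, ids))  # U003 (a "leftward" lookup VLOOKUP cannot do)
print(pivot_sum(users, 2, 3))           # {'Pune': 420.0, 'Leeds': 45.5}
```

The `index_match` call shows why analysts often prefer INDEX-MATCH: because the lookup and return columns are separate, you can find an ID from a name even though the ID column sits to the left.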

4. Power BI

Power BI, Microsoft's business analytics product, is a great tool for data scientists who need to build interactive reports and dashboards. Its user-friendly features let users create robust, interactive reports without writing code.

It also connects to a variety of data sources, such as Excel, SQL databases, and online services, which helps teams make the decisions they need quickly.

Best Use Case:

Power BI is well suited to non-technical users who need to build dashboards and analyze corporate data without relying on coding assistance.

Key Features:

● Drag-and-drop interface: Users can build accurate, attractive charts simply by dragging and dropping fields, which saves time.

● Real-time data streaming: Dashboards can update from streaming data sources, which matters when speed and accuracy are critical.

● AI-driven insights: Built-in AI features, such as forecasting, can be configured through the available settings to project trends and inform business plans.

5. MySQL

MySQL is one of the world's best-known relational database management systems (RDBMS). It stores structured information in tables and is well suited to retrieving and manipulating that data. Data scientists should have a good grasp of SQL (Structured Query Language) to work with databases effectively.

Best Use Case:

MySQL is a popular choice for companies with large structured datasets or data pipelines, because its relational design keeps information organized and consistent.

Key Features:

● SQL querying: You can easily retrieve and alter data with SQL queries.

● Data normalization: Organizes tables to eliminate duplicated data, which reduces inconsistencies and maintenance work.

● Subqueries and joins: Combine and query data stored across multiple tables.
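The querying, normalization, and join features above can be illustrated with a small, self-contained example. So that it runs without a database server, it uses Python's built-in `sqlite3` module with an in-memory SQLite database as a stand-in for MySQL. The SQL is standard and should carry over to MySQL largely unchanged, but that is an assumption; it has not been run against a MySQL server. The tables and data are hypothetical.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for MySQL
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Normalized schema: each customer is stored once, and orders reference customers by id
cur.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, city TEXT);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(id),
    amount REAL
);
INSERT INTO customers VALUES (1, 'Asha', 'Pune'), (2, 'Ben', 'Leeds'), (3, 'Chen', 'Austin');
INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 45.5), (4, 3, 300.0);
""")

# JOIN: total spend per customer, highest first
join_rows = cur.execute("""
SELECT c.name, SUM(o.amount) AS total
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.name
ORDER BY total DESC
""").fetchall()
print(join_rows)  # [('Chen', 300.0), ('Asha', 200.0), ('Ben', 45.5)]

# Subquery: customers with at least one order above the average order amount
big_spenders = cur.execute("""
SELECT name FROM customers
WHERE id IN (
    SELECT customer_id FROM orders
    WHERE amount > (SELECT AVG(amount) FROM orders)
)
ORDER BY name
""").fetchall()
print(big_spenders)  # [('Chen',)]

conn.close()
```

Because the customer's name and city live only in `customers`, a change of city is made in one place and every joined query picks it up, which is the practical payoff of normalization.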

6. Tableau

Tableau is a powerful data visualization tool that lets data scientists build interactive dashboards that can be shared and updated dynamically. It is versatile and connects to many types of data sources. Its easy-to-use interface also lets beginners add images, text, and other elements to their dashboards.

Best Use Case:

Tableau is used to build clear, easy-to-understand visual reports that help non-technical stakeholders grasp the ideas the data conveys.

Key Features:

● Drag-and-drop interface: Build visualizations that capture the essential information quickly and simply by dragging and dropping fields.

● Live data connections: Data is updated in real time.

● Customizable dashboards: Users can tailor layouts and visuals to create their own graphical reports.

7. Jupyter Notebook

Jupyter Notebook is a web-based interactive environment in which data scientists can combine code, equations, graphs, and narrative text in a single document. It supports multiple programming languages, including Python, the language data scientists most frequently use for their work.

Best Use Case:

Its hands-on approach, which makes mathematical and statistical ideas easy to visualize, makes Jupyter Notebook ideal for prototyping machine learning models, exploratory data analysis (EDA), and sharing results in an interactive, reproducible way.

Key Features:

● Interactive environment: Run coding experiments and visualize the results immediately.

● Markdown support: Document your findings and explain your code alongside it.

● Library integration: Works with libraries such as scikit-learn, pandas, and Matplotlib.

8. Google Colab

Google Colab is a cloud-based Jupyter Notebook environment that offers free access to GPUs and TPUs, making it well suited to machine learning and deep learning work. Others can view and edit your notebooks in real time, which also makes it very useful for collaboration.

Best Use Case:

Colab is ideal for group data science projects, especially deep learning projects that require substantial computing power.

Key Features:

● Free GPU/TPU access: Significantly reduces the time needed to train machine learning models.

● Cloud-based: Access it from anywhere, with no setup or configuration required.

● Collaboration: The possibility of sharing and editing notebooks in real-time.

9. KNIME

KNIME is an open-source analytics platform that provides a visual way to design and manage data operations. It extends its already powerful built-in tools by integrating with languages such as R and Python, which are often used for more sophisticated analytics.

Best Use Case:

KNIME is a good choice for automating workflows that handle data pre-processing, transformation, and modeling, especially when working with large datasets.

Key Features:

● Drag-and-drop interface: Data analysis workflows are built by dragging and connecting nodes rather than writing code.

● Python/R integration: Python or R scripts can be added to a workflow when a task calls for more complex analysis.

● Large community and support: A large community contributes a wide range of plugins for diverse applications.

10. Apache Spark

Apache Spark is a distributed computing platform built to process very large datasets quickly. Because it processes data in memory, it speeds up data analysis. Spark also provides specialized libraries for real-time data streaming (Spark Streaming), graph processing (GraphX), and machine learning (MLlib).

Best Use Case:

Apache Spark is very helpful for big data analysis and real-time data processing, especially in sectors such as healthcare, retail, and finance, where enormous datasets must be processed.

Key Features:

● In-memory processing: Keeping data in memory makes processing faster.

● Machine learning and graph analytics: Libraries for machine learning and graph analytics are bundled together in Spark for combined use.

● Distributed computing: Computation is spread across a cluster of networked machines to increase speed and processing power.
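Spark's core idea of splitting data into partitions, processing each partition independently, and then combining the partial results can be sketched in plain Python. This is only a conceptual illustration using the standard library: a thread pool stands in for a cluster of worker machines, Spark itself is not used, and CPython threads give no real parallel speed-up for CPU-bound work. The `partition` and `count_words` helpers and the sample lines are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def partition(items, n):
    """Split items into n roughly equal chunks, standing in for Spark partitions."""
    return [items[i::n] for i in range(n)]

def count_words(lines):
    """Map step: count words within a single partition, independently of the others."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

# Hypothetical input data
lines = [
    "Spark keeps data in memory",
    "data in memory is fast",
    "Spark splits data across nodes",
    "each node processes its own partition",
]

parts = partition(lines, 3)

# Each "worker" processes one partition; threads stand in for cluster nodes here
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_counts = list(pool.map(count_words, parts))

# Reduce step: merge the per-partition counts into a single total
total = reduce(lambda a, b: a + b, partial_counts, Counter())
print(total.most_common(3))
```

With PySpark installed, the same word count is commonly written as `sc.parallelize(lines).flatMap(lambda line: line.lower().split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, where `sc` is a `SparkContext`; Spark then distributes the partitions across real cluster nodes and keeps intermediate data in memory.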

Conclusion

Python and R handle the core programming and analysis work, while Jupyter Notebook and Google Colab provide interactive environments for experimenting and collaborating. Tableau, Power BI, and Excel are used to visualize and present data. MySQL, Spark, and KNIME take care of data management, large-scale processing, and workflow automation.

By learning these technologies, data scientists can increase their output, make data-driven decisions, and communicate their findings to stakeholders. Whether you are new to the field or looking to expand your toolset, these tools are the first things to learn and will guide you along your data science path.

FAQs on Data Science

What significance does Python possess for data science?

Python is widely used in data science because it is a simple language with easy-to-read syntax and a rich ecosystem of libraries. Together, these make it an effective tool for transforming raw data into meaningful information that helps a business grow.

What is the difference between Google Colab and Jupyter Notebook?

Both let you write and execute Python code in notebooks. Jupyter Notebook typically runs locally on your own machine, while Google Colab is a cloud-based platform that lets you work with Python code without installing anything. Colab also offers free GPU access for machine learning projects.

Are you beginning your journey in data science? Understanding the right tools can streamline your learning and boost your efficiency. Whether you’re a student or a professional transitioning into the field, mastering these tools will set a solid foundation. Plus, if you aim to land your dream role, a data science recruitment agency can help connect you with top opportunities. Start exploring now and take your first step toward becoming a data science expert!

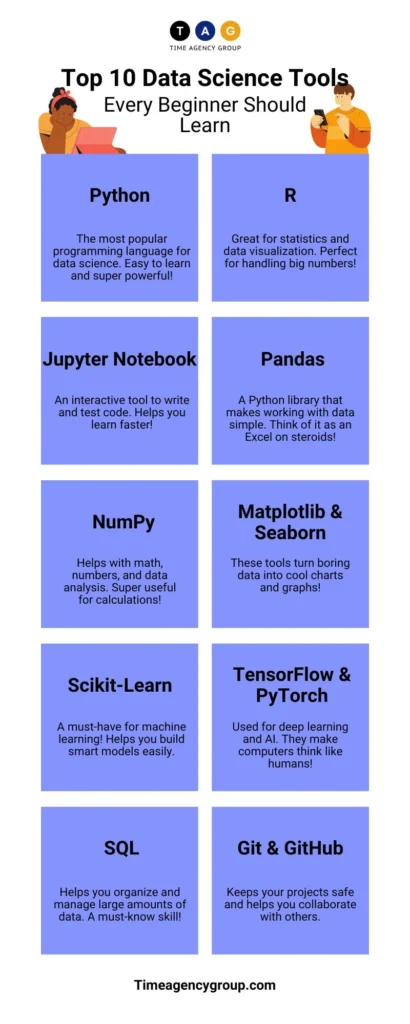

Infographic